Are you preparing for the MuleSoft Certified Developer certification and looking for guidelines and material on how to prepare? Then you have reached the right place. While preparing for the certification, I took notes, and I am sharing them here so that they can help anyone else preparing for it. This is the first part of the notes. You can find the second part here - How to prepare for MuleSoft Certified Developer Certification - Part II.

| Item | Notes |

|

Introducing application networks and API-led connectivity |

1. Rate of change: the delivery gap has increased over time.
2. Central IT / Line of Business (LoB) IT: create reusable assets and make them discoverable.
3. Modern API: discoverable and accessible through self-service; productized and designed for ease of consumption; secured, scalable, and performance-oriented.
4. API-led connectivity
   a. Uses modern APIs.
   b. Three layers: System APIs, Process APIs, Experience APIs.
   c. Responsibility:
      System APIs -> Central IT (unlock assets and decentralize)
      Process APIs -> LoB IT (discover and reuse System APIs, and compose)
      Experience APIs -> Developers (discover, self-serve, reuse, and consume Process APIs)
   d. Advantages: reusable, agile, productive, better governance, speed within the same timeline.
   e. An application network created using API-led connectivity is a bottom-up approach.
5. Center for Enablement (C4E): a cross-functional team. Responsibility: promoting consumption of assets in an organization.
6. API: Application Programming Interface. It has inputs, outputs, operations, and data types. The term "API" is normally used to refer to an API specification, a web service (the implementation), or an API proxy (controls access to the web service, restricting access and usage through an API gateway).
7. Web service: a method of communication between two software systems.
   i) The term has three meanings:
      a. Web service API (defines how to interact with the web service)
      b. Web service interface (provides structure)
      c. Web service implementation (the actual code)
   ii) Types: SOAP-based web services, RESTful web services.
   iii) RESTful web service methods: GET, POST, PUT, DELETE, etc.
8. A RESTful web service responds with a status code. Status codes: 200 - OK (GET, DELETE, PATCH, PUT), 201 - Created (POST), 304 - Not modified (PATCH, PUT), 400 - Bad request (All), 401 - Unauthorized (All), 404 - Resource not found (All), 500 - Server error (All).
9. API development lifecycle: a) API specification (design), simulation (create a prototype and make it available to consumers), validation (output: API specification/contract).
10. The System layer of MuleSoft API-led connectivity is intended to expose part of a backend without business logic.
11. An application network is used to create reusable APIs and assets designed to be consumed by other business units.
12. Center for Enablement: creates and manages discoverable assets to be consumed by line of business developers.
13. A modern API is designed first, using an API specification, for rapid feedback.
14. The 'PUT' HTTP method in a RESTful web service is used to replace an existing resource. |
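As a rough illustration of items 7, 8, and 14 above, here is a minimal Python sketch of how a RESTful resource might map HTTP methods to the status codes listed in the notes. It is not MuleSoft code: the `handle` function, the in-memory `customers` store, and the ID scheme are all hypothetical and exist only for this example.

```python
from http import HTTPStatus
from itertools import count

# Hypothetical in-memory "customers" resource, keyed by ID (assumption for this sketch).
customers = {}
_next_id = count(1)


def handle(method, customer_id=None, body=None):
    """Return (status_code, response_body) for a request against the resource."""
    if method == "POST":
        if not body:
            return HTTPStatus.BAD_REQUEST, None            # 400 - Bad request
        new_id = str(next(_next_id))
        customers[new_id] = body
        return HTTPStatus.CREATED, {"id": new_id, **body}  # 201 - Created

    if method not in ("GET", "PUT", "DELETE"):
        raise ValueError(f"method {method} is outside this sketch")

    if customer_id not in customers:
        return HTTPStatus.NOT_FOUND, None                  # 404 - Resource not found

    if method == "GET":
        return HTTPStatus.OK, customers[customer_id]       # 200 - OK
    if method == "PUT":
        if not body:
            return HTTPStatus.BAD_REQUEST, None
        customers[customer_id] = body                      # PUT replaces the whole resource
        return HTTPStatus.OK, body
    # DELETE
    del customers[customer_id]
    return HTTPStatus.OK, None


status, created = handle("POST", body={"name": "Ada"})
print(int(status), created)
print(handle("PUT", created["id"], {"name": "Grace"}))
```

Note that PUT swaps the stored representation for the new one rather than merging fields, which is the "replace an existing resource" behaviour from item 14.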

|

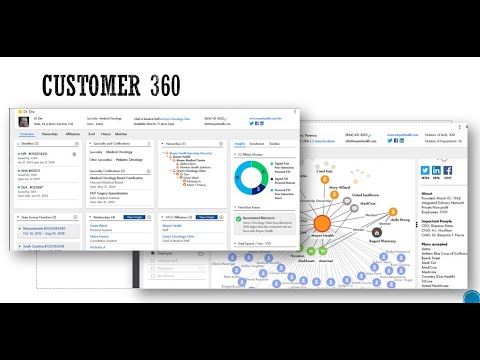

Introducing Anypoint Platform |

1. Anypoint Platform: design, build, deploy, and manage.
2. Major components:
   Design Center (rapid development): design APIs
   Exchange (collaboration): discoverable, accessible through self-service
   Management Center (visibility and control): security, scalability, performance
3. Anypoint Platform is used by specialists, admins, Ops, DevOps, ad-hoc integrators, and app developers.
4. Supported platforms: on-premises, private cloud, cloud service providers, hosted by MuleSoft (CloudHub), hybrid.
5. Benefits of API-led connectivity: speedy delivery, actionable visibility, secure, future-proof, intentional self-service.
6. API specification phase tools: API Designer, API Console and mocking service, Exchange, API Portal, API Notebook. Output: a validated API specification in RAML.
7. Build (implementation) phase tools: Anypoint Studio, MUnit.
8. API management phase tools: API Manager, API Analytics, Runtime Manager, Visualizer.
9. Troubleshooting and scaling: Runtime Manager, API Manager.
10. Design Center: used to create integration applications, API specifications, and API fragments.
   Flow Designer: web app to connect systems and consume APIs
   API Designer: web app to design, document, and mock APIs
   Anypoint Studio: IDE to implement APIs and build integration applications
11. Mule applications can be created using Flow Designer, Anypoint Studio, or by writing code (XML). The Mule runtime decouples point-to-point integrations. It also enforces policies for API governance.
12. Mule applications accept and process a Mule event through multiple Mule event processors, all plugged together in a flow. A flow is the only thing that is executed in a Mule application. A flow has three areas: source, process area, and error handling.
13. CloudHub worker: a dedicated instance of Mule that runs a single application.
14. A Mule event is a data structure with the following components:
   Mule message
      Attributes: metadata (headers and parameters)
      Payload: the actual data
   Variables: declared using processors within the flow
15. Flow Designer is used to design and develop a fully functional Mule application in a hosted environment.
16. A deployed Flow Designer application runs in a CloudHub worker.
17. Anypoint Exchange is used to publish, share, and search APIs.
18. API portals cannot be created using Design Center. |
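To make items 12 and 14 above more concrete, here is a conceptual Python model of a Mule event moving through a flow. It is only a sketch of the idea, not the Mule runtime API: the `Message`, `Event`, and `Flow` classes and the example processors are hypothetical names, and treating the source and error handler as optional is a modeling choice for this sketch.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class Message:
    payload: Any = None                              # the actual data
    attributes: dict = field(default_factory=dict)   # metadata (headers, parameters)


@dataclass
class Event:
    message: Message = field(default_factory=Message)
    variables: dict = field(default_factory=dict)    # set by processors in the flow


@dataclass
class Flow:
    processors: list                                       # process area
    source: Optional[Callable[[], Event]] = None           # source area
    error_handler: Optional[Callable[[Event, Exception], Event]] = None

    def run(self, event: Optional[Event] = None) -> Event:
        if event is None:
            if self.source is None:
                raise ValueError("no source and no incoming event")
            event = self.source()                  # the source creates the event
        try:
            for processor in self.processors:      # each processor receives the event
                event = processor(event)
        except Exception as exc:
            if self.error_handler is None:
                raise
            event = self.error_handler(event, exc)
        return event


# Hypothetical source and processors, for illustration only.
def http_source() -> Event:
    return Event(Message(payload="hello", attributes={"queryParams": {"upper": "true"}}))

def set_variable(event: Event) -> Event:
    event.variables["upper"] = event.message.attributes["queryParams"]["upper"] == "true"
    return event

def transform(event: Event) -> Event:
    if event.variables["upper"]:
        event.message.payload = event.message.payload.upper()
    return event


flow = Flow(source=http_source, processors=[set_variable, transform])
print(flow.run().message.payload)
```

The point of the model is that processors never talk to each other directly: everything they share travels in the event, either in the message (payload and attributes) or in the variables.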

|

Designing APIs |

1. API design approaches: hand coding, Apiary (API Blueprint), Swagger (OpenAPI Specification), RAML.
2. RAML is used to auto-generate documentation, mock endpoints, and create interfaces for API specifications.
3. RAML contains nodes and facets.
   Resources are nodes. They start with /.
   Facets are special configurations applied to resources.
4. RAML code can be modularized using data types, examples, traits, resource types, overlays, extensions, security schemes, documentation, annotations, and libraries.
5. Fragments can be stored:
   In files and folders within a project
   In a separate API fragment project in Design Center
   In a separate RAML fragment in Exchange
6. As an anonymous user, we can make calls to an API instance that uses the mocking service, but not to managed APIs.
7. To make an API discoverable, we need to publish it to Anypoint Exchange. |

|

Building APIs |

1. The Mule event source initiates the execution of the flow.
2. Mule event processors transform, filter, enrich, and process the event data.
3. Variables, which are part of the Mule event, are referenced by processors.
4. A Mule flow contains source, process, and error handling areas:
   Source: optional
   Process: required
   Error handling: optional
5. By default, data is returned in Java format. The Transform Message component is used to convert Java to JSON using DataWeave.
6. A RESTful interface for an application will have listeners for each resource method.
7. We can create the interface either manually or generate it from an API definition.
8. APIkit is an open-source toolkit that comes with Anypoint Studio and is used to generate an interface based on a RAML API definition.
   It generates a main routing flow and flows for each API resource.
   The generated interface can be hooked up to implementation logic.
   APIkit creates a separate flow for each HTTP method.
   The APIkit router validates requests against the RAML API specification and routes them to the API implementation.
9. Anypoint Platform uses Git for version control, which internally uses pull, push, and merge operations for code edits. |
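The routing idea in item 8 above can be sketched in Python. This is not APIkit itself, only a toy model of "validate the request against the API definition, then route to one flow per resource method". The `API_DEFINITION` dictionary, the `method:/path` flow names, and the stub flows are hypothetical, and answering 405 for a method the definition does not allow is an assumption that goes beyond the status codes listed in these notes.

```python
# Hypothetical API definition: resource path -> allowed HTTP methods.
API_DEFINITION = {
    "/customers": {"get", "post"},
    "/customers/{id}": {"get", "put", "delete"},
}


def make_stub(name):
    """Create a placeholder flow, similar in spirit to a generated, empty flow."""
    def flow(uri_params, body):
        return 200, {"flow": name, "uriParams": uri_params}
    return flow


# One flow per (method, resource) pair, named "method:/path" for this sketch.
FLOWS = {
    f"{method}:{template}": make_stub(f"{method}:{template}")
    for template, methods in API_DEFINITION.items()
    for method in methods
}


def match_resource(path):
    """Return (template, uri_params) for a request path, or None if nothing matches."""
    parts = path.strip("/").split("/")
    for template in API_DEFINITION:
        template_parts = template.strip("/").split("/")
        if len(template_parts) != len(parts):
            continue
        params = {}
        for t, p in zip(template_parts, parts):
            if t.startswith("{") and t.endswith("}"):
                params[t[1:-1]] = p        # capture a URI parameter
            elif t != p:
                break                      # literal segment differs
        else:
            return template, params
    return None


def route(method, path, body=None):
    """Validate the request against the definition, then call the matching flow."""
    match = match_resource(path)
    if match is None:
        return 404, {"message": "Resource not found"}
    template, params = match
    if method.lower() not in API_DEFINITION[template]:
        return 405, {"message": "Method not allowed"}   # assumption, see lead-in
    return FLOWS[f"{method.lower()}:{template}"](params, body)


# Hook implementation logic into one generated flow.
FLOWS["post:/customers"] = lambda uri_params, body: (201, body)

print(route("GET", "/customers/42"))
print(route("POST", "/customers", {"name": "Ada"}))
print(route("GET", "/orders"))
```

Keeping the router separate from the flows is what lets the interface be regenerated from the definition while the implementation logic lives in the individual flows.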