Are you looking for an article on how to prepare a checklist for an Informatica MDM installation? You may have gone through the Informatica MDM Installation Guide and still be unsure where to start. The best place to start is by preparing a checklist. In this article, we will look at the main topics the checklist should cover, and we will also see a sample checklist file.

Introduction

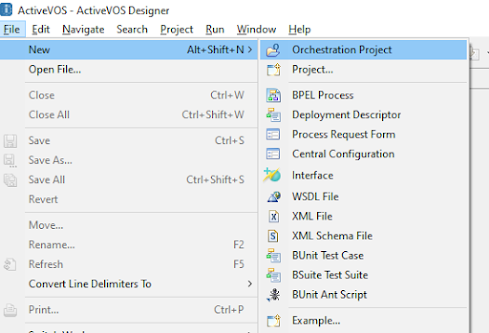

After going through more than 100 pages of the Installation Guide, I realized that we need to prepare a checklist for each component of Informatica MDM. An Informatica MDM installation involves the Hub Server, the Process Server, the Informatica Data Director (IDD) Configuration Manager, the Provisioning Tool, business process management (ActiveVOS), Elasticsearch, an application server (such as JBoss, WebSphere, or WebLogic), and a database (such as SQL Server, DB2, or Oracle). Each of these components has its own set of instructions, and it is easy to get lost or overwhelmed by them. So take a deep breath and start by documenting the main sections, as described in the next section.

Components to consider for installation

The components you need to install depend on your business needs. However, a few components, such as the Hub Server and the Process Server, are required regardless of business needs. So first document all the components you are planning to install. Here is a sample list of components.

Informatica MDM Installation checklist

The checklist contains details about each of the components captured in the previous section. For example, the "Create the MDM Hub Master Database" section records the database name, server name, port, and credentials. You can access the complete checklist here.
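If you would rather build your own checklist file than start from a downloaded one, here is a minimal Python sketch that writes a CSV template with one row per item to fill in. The `CHECKLIST` dictionary, the `render_checklist_csv` helper, the output file name `mdm_install_checklist.csv`, and every section and item name are hypothetical, chosen for illustration; only the Master Database items come from the description above. Extend the dictionary with the components you documented in the previous step.

```python
import csv
import io

# Hypothetical checklist: section title -> details to record before installing.
# Section and item names are illustrative; adapt them to the components you plan to install.
CHECKLIST = {
    "Create the MDM Hub Master Database": ["Database name", "Server name", "Port", "Credentials"],
    "Application Server (JBoss, WebSphere or WebLogic)": ["Server type", "Host name", "Port", "Install directory"],
    "Hub Server": ["Install directory", "Application server", "Master Database"],
    "Process Server": ["Install directory", "Application server", "Host name"],
}


def render_checklist_csv(checklist):
    """Return the checklist as CSV text: one row per item, Value left blank, Status set to 'Pending'."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["Section", "Item", "Value", "Status"])
    for section, items in checklist.items():
        for item in items:
            writer.writerow([section, item, "", "Pending"])
    return buffer.getvalue()


if __name__ == "__main__":
    # newline="" keeps the csv module's \r\n line endings from being translated a second time.
    with open("mdm_install_checklist.csv", "w", newline="", encoding="utf-8") as f:
        f.write(render_checklist_csv(CHECKLIST))
```

Running the script produces a file you can open in a spreadsheet tool and fill in as you gather the details for each component. A CSV is used here only because it is easy to share and keep under version control; any spreadsheet format would serve the same purpose.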

For reference, the checklist looks like the one below.