Introduction

Organizations face a growing challenge in managing and integrating data efficiently across applications, platforms, and systems. The Informatica Intelligent Data Management Cloud (IDMC) addresses this challenge with a cloud platform for data integration, transformation, and management. This article explores the key features and benefits of Informatica IDMC in the context of application integration.

The Significance of Application Integration

Application integration is the process of connecting and aligning various software applications within an organization to ensure the seamless flow of data and business processes. Effective application integration is vital for enabling data-driven decision-making, enhancing productivity, and ensuring a superior customer experience. However, achieving successful application integration can be complex due to the heterogeneity of applications, data formats, and protocols.

Informatica IDMC: A Holistic Solution

Informatica IDMC is a cloud-based data management and integration platform designed to address these challenges. It offers a wide range of features and capabilities that make application integration efficient, secure, and scalable.

- Unified Platform: Informatica IDMC provides a single, unified platform for integrating data across various applications, databases, and cloud services. This centralized approach simplifies integration efforts, reduces complexity, and accelerates time-to-value.

- Pre-built Connectors: The platform includes a large library of pre-built connectors and adapters for integrating with popular applications, databases, and services, such as Salesforce, SAP, and AWS. These connectors significantly reduce the development effort and time required for integration projects.

- Data Transformation and Quality: Informatica IDMC offers powerful data transformation and quality tools, ensuring that data is standardized, cleansed, and enriched as it flows through the integration process. This enhances data accuracy and reliability.

- Security and Compliance: Security is paramount in data integration. Informatica IDMC provides robust security measures, including data encryption, access control, and auditing capabilities, to protect sensitive information. It also supports compliance with data privacy regulations like GDPR and CCPA.

- Scalability: As organizations grow, their data integration needs evolve. Informatica IDMC scales with your business, ensuring that you can handle increased data volumes and complexity without a significant overhaul of your integration infrastructure.

- Monitoring and Governance: Informatica IDMC offers comprehensive monitoring and governance tools that provide real-time visibility into integration processes, allowing for quick issue resolution and better decision-making.
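
As a rough illustration of what standardizing and cleansing data in an integration flow can involve, the following Python sketch normalizes customer records from a source application and drops invalid or duplicate entries before they would be handed to a target system. It does not use Informatica IDMC or any of its APIs; the function names, field names, and sample records are hypothetical and chosen only for this example.

```python
import re
from typing import Optional


def standardize_record(raw: dict) -> Optional[dict]:
    """Return a cleansed copy of a hypothetical customer record, or None if unusable."""
    email = (raw.get("email") or "").strip().lower()
    # Cleansing: reject records without a plausible email address.
    if not re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", email):
        return None
    # Standardization: collapse repeated whitespace and title-case the name.
    name = " ".join((raw.get("name") or "").split()).title()
    # Standardization: keep only the digits of the phone number.
    phone = re.sub(r"\D", "", raw.get("phone") or "")
    return {"name": name, "email": email, "phone": phone}


def cleanse(records: list[dict]) -> list[dict]:
    """Standardize records, dropping invalid ones and duplicate emails."""
    seen = set()
    result = []
    for raw in records:
        record = standardize_record(raw)
        if record is None or record["email"] in seen:
            continue
        seen.add(record["email"])
        result.append(record)
    return result


# Hypothetical records as they might arrive from a source application.
source_records = [
    {"name": "  ada   lovelace ", "email": "Ada@Example.com ", "phone": "+1 (555) 010-0000"},
    {"name": "Ada Lovelace", "email": "ada@example.com", "phone": "15550100000"},
    {"name": "No Email", "email": "", "phone": ""},
]
clean_records = cleanse(source_records)
```

In this sketch the second record is treated as a duplicate of the first once both emails are lowercased, and the third is rejected for lacking an email address. A real integration platform would typically express such rules through its own mapping and data quality tooling rather than hand-written code.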

Benefits of Using Informatica IDMC for Application Integration

- Enhanced Productivity: Informatica IDMC simplifies the integration process by offering a user-friendly interface and pre-built connectors. This reduces the development time and resources an integration requires, allowing your IT teams to focus on strategic tasks.

- Improved Data Quality: With data transformation and quality tools, Informatica IDMC ensures that data remains consistent and reliable throughout the integration process, leading to more accurate insights and decisions.

- Cost Efficiency: By streamlining integration and reducing the need for custom coding, IDMC helps lower the total cost of ownership for data integration projects.

- Faster Time-to-Market: The platform's pre-built connectors and tools enable organizations to bring new applications and services to market faster, gaining a competitive edge.

- Scalability: Informatica IDMC ensures that your integration infrastructure can adapt to growing data requirements, reducing the need for frequent system overhauls.

- Compliance and Data Security: By adhering to data privacy regulations and offering robust security measures, Informatica IDMC helps organizations avoid compliance issues and data breaches.

Informatica IDMC simplifies application integration by offering a unified, cloud-based platform. It not only streamlines integration but also enhances data quality, security, and governance. With its scalability and cost efficiency, IDMC is a valuable tool for organizations of any size, from small businesses to large enterprises, that want to get more from their data as part of their digital transformation.

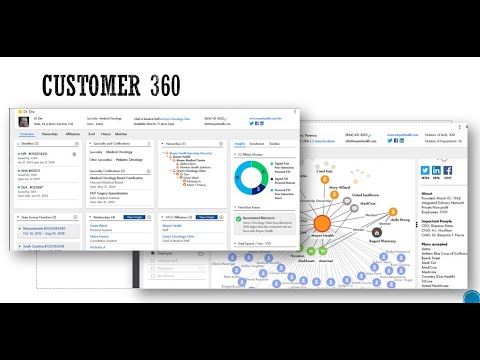
